Workflow automation with Argo Workflows

In this short guide, we show how to use Argo Workflows on our cloud. It is based on the official Argo Workflows Quick Start guide.

Introduction

Argo Workflows is an open-source, container-native workflow engine for orchestrating parallel jobs on Kubernetes. It is implemented as a Kubernetes Custom Resource Definition (CRD).
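Because a workflow is a Kubernetes custom resource, it is written as an ordinary manifest with `apiVersion: argoproj.io/v1alpha1` and `kind: Workflow`. Below is a minimal sketch that reuses the `argoproj/argosay:v2` image from the UI example further down. The `minimal-` name prefix and the message are illustrative assumptions:

```yaml
# A Workflow is a custom resource served by the Argo CRDs (API group argoproj.io)
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: minimal-   # Kubernetes appends a random suffix to this prefix
spec:
  entrypoint: main         # the template that runs first
  templates:
    - name: main
      container:
        image: argoproj/argosay:v2
        command: [/argosay]
        args: [echo, "hello from a Workflow resource"]
```

Because the manifest uses `generateName` instead of a fixed name, it has to be created with `kubectl create` or `argo submit` rather than `kubectl apply`.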

You can use it, for example, to run compute-intensive jobs for machine learning or data processing, or to run CI/CD pipelines natively on Kubernetes.
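Parallelism is declared in the workflow spec itself. In a `steps` template, the outer list runs in order, while all steps inside one inner list start at the same time. A minimal sketch follows. The template names, step names, and messages are hypothetical:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: parallel-steps-
spec:
  entrypoint: pipeline
  templates:
    - name: pipeline
      steps:
        # Group 1: a single step that runs first
        - - name: prepare
            template: say
            arguments:
              parameters:
                - name: message
                  value: preparing data
        # Group 2: both steps start together once "prepare" has finished
        - - name: process-a
            template: say
            arguments:
              parameters:
                - name: message
                  value: processing part A
          - name: process-b
            template: say
            arguments:
              parameters:
                - name: message
                  value: processing part B
    # Reusable container template that prints its input parameter
    - name: say
      inputs:
        parameters:
          - name: message
      container:
        image: argoproj/argosay:v2
        command: [/argosay]
        args: [echo, "{{inputs.parameters.message}}"]
```

Here `process-a` and `process-b` run as separate pods at the same time. The workflow finishes when both have completed.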

Install Argo Workflows

Open a terminal and run the following commands:

```bash
ARGO_WORKFLOWS_VERSION="v3.5.8" # check https://github.com/argoproj/argo-workflows/releases/ for newer versions
kubectl create namespace argo
kubectl apply -n argo -f "https://github.com/argoproj/argo-workflows/releases/download/${ARGO_WORKFLOWS_VERSION}/quick-start-minimal.yaml"
```

Install the Workflows CLI

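To interact with Argo Workflows more easily, you can install the `argo` CLI from the Argo Workflows releases page. On Linux (amd64), run the following commands in the same shell session:

```bash
# Download the CLI release that matches the server version set above
curl -sLO "https://github.com/argoproj/argo-workflows/releases/download/${ARGO_WORKFLOWS_VERSION}/argo-linux-amd64.gz"
gunzip argo-linux-amd64.gz
chmod +x argo-linux-amd64
sudo mv ./argo-linux-amd64 /usr/local/bin/argo
# Verify the installation
argo version
```

Submit an example workflow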

Submit via the CLI

Argo provides examples that can be submitted directly from a URL. With the --watch flag, we can follow the lifecycle of the workflow:

```bash
argo submit -n argo --watch https://raw.githubusercontent.com/argoproj/argo-workflows/main/examples/hello-world.yaml
```

```
Name:                hello-world-txldx
Namespace:           argo
ServiceAccount:      unset (will run with the default ServiceAccount)
Status:              Succeeded
Conditions:
 PodRunning          False
 Completed           True
Created:             Mon Jul 29 10:13:33 +0200 (27 seconds ago)
Started:             Mon Jul 29 10:13:33 +0200 (27 seconds ago)
Finished:            Mon Jul 29 10:14:00 +0200 (now)
Duration:            27 seconds
Progress:            1/1
ResourcesDuration:   0s*(1 cpu),6s*(100Mi memory)

STEP                  TEMPLATE  PODNAME            DURATION  MESSAGE
 ✔ hello-world-txldx  whalesay  hello-world-txldx  18s
```

With this command you can list all workflows in the namespace:

```bash
argo list -n argo
```

```
NAME                STATUS      AGE   DURATION   PRIORITY   MESSAGE
hello-world-txldx   Succeeded   2m    27s        0
```

The next command prints the details of a workflow. With @latest, the most recent workflow is returned:

```bash
argo get -n argo @latest
```

```
Name:                hello-world-txldx
Namespace:           argo
ServiceAccount:      unset (will run with the default ServiceAccount)
Status:              Succeeded
Conditions:
 PodRunning          False
 Completed           True
Created:             Mon Jul 29 10:13:33 +0200 (2 minutes ago)
Started:             Mon Jul 29 10:13:33 +0200 (2 minutes ago)
Finished:            Mon Jul 29 10:14:00 +0200 (1 minute ago)
Duration:            27 seconds
Progress:            1/1
ResourcesDuration:   0s*(1 cpu),6s*(100Mi memory)

STEP                  TEMPLATE  PODNAME            DURATION  MESSAGE
 ✔ hello-world-txldx  whalesay  hello-world-txldx  18s
```

With this last command, we can take a look at the container logs:

```bash
argo logs -n argo @latest
```

```
hello-world-txldx: time="2024-07-29T08:13:50.489Z" level=info msg="capturing logs" argo=true
hello-world-txldx:  _____________
hello-world-txldx: < hello world >
hello-world-txldx:  -------------
hello-world-txldx:     \
hello-world-txldx:      \
hello-world-txldx:       \
hello-world-txldx:                     ##        .
hello-world-txldx:               ## ## ##       ==
hello-world-txldx:            ## ## ## ##      ===
hello-world-txldx:        /""""""""""""""""___/ ===
hello-world-txldx:   ~~~ {~~ ~~~~ ~~~ ~~~~ ~~ ~ /  ===- ~~~
hello-world-txldx:        \______ o          __/
hello-world-txldx:         \    \        __/
hello-world-txldx:           \____\______/
hello-world-txldx: time="2024-07-29T08:13:51.490Z" level=info msg="sub-process exited" argo=true error="<nil>"
```

Submit via the UI

Argo Workflows also provides a web UI. To open it from your local machine, forward the Argo Server port:

```bash
kubectl -n argo port-forward service/argo-server 2746:2746
```

Now you can access the UI at https://localhost:2746.

Your browser will show a certificate warning, which you have to accept manually.

Learn here how to expose the Argo Server to the internet.

Please be aware that this setup has no user management out of the box, so anyone who can reach the UI can use it.

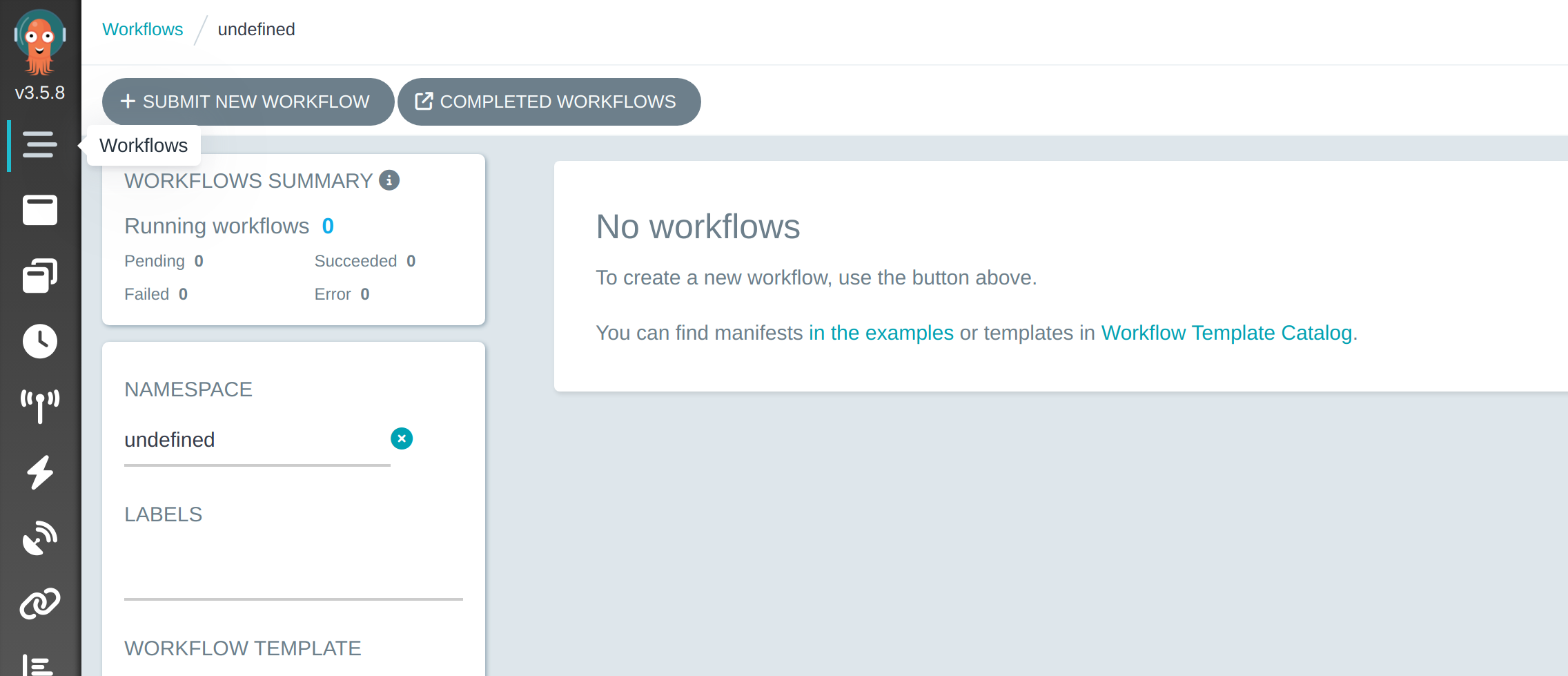

Now click on Workflows in the top left and then the + SUBMIT NEW WORKFLOW button.

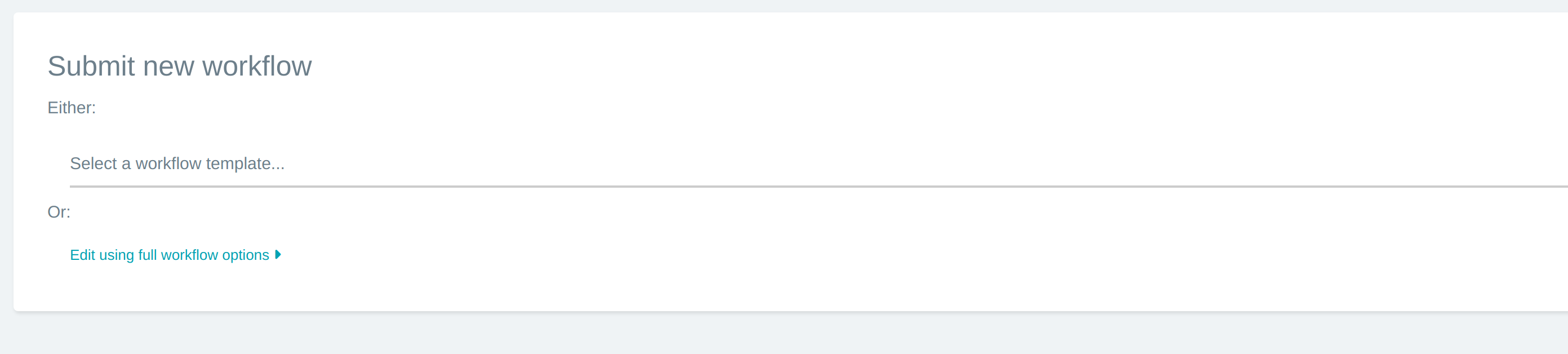

Now click on Edit using full workflow options.

The editor is pre-filled with an example workflow. Make sure its namespace refers to an existing namespace, or replace the content with the YAML below:

```yaml
metadata:
  name: delightful-python
  namespace: argo
  labels:
    example: 'true'
spec:
  arguments:
    parameters:
      - name: message
        value: hello argo
  entrypoint: argosay
  templates:
    - name: argosay
      inputs:
        parameters:
          - name: message
            value: '{{workflow.parameters.message}}'
      container:
        name: main
        image: argoproj/argosay:v2
        command:
          - /argosay
        args:
          - echo
          - '{{inputs.parameters.message}}'
  ttlStrategy:
    secondsAfterCompletion: 300
  podGC:
    strategy: OnPodCompletion
```
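The last two fields of this example control cleanup. `ttlStrategy.secondsAfterCompletion: 300` deletes the workflow five minutes after it finishes, and `podGC` with `OnPodCompletion` deletes each pod as soon as it completes. If you would rather keep failed runs around for debugging, a variant along these lines may fit better. The concrete durations are illustrative assumptions:

```yaml
spec:
  ttlStrategy:
    secondsAfterSuccess: 300     # delete successful workflows after 5 minutes
    secondsAfterFailure: 86400   # keep failed workflows for one day
  podGC:
    strategy: OnPodSuccess       # delete only pods that succeeded; failed pods stay for inspection
```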

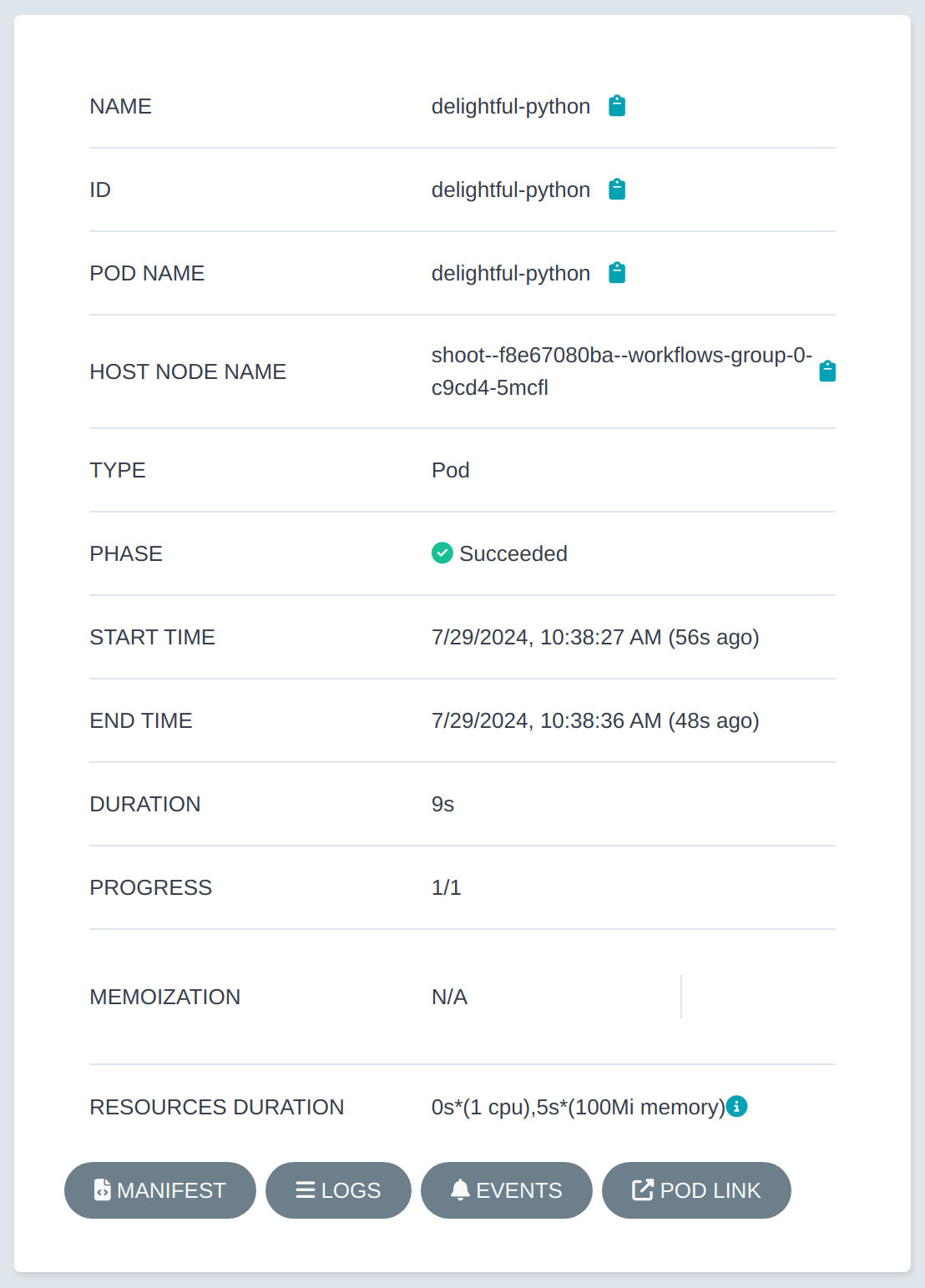

After you submit the workflow, it appears in the workflow list. Click on it to check the results.
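The example above uses a fixed `metadata.name`, so creating the same manifest again (for instance with `argo submit`) is rejected while the earlier workflow still exists, that is, until the `ttlStrategy` removes it 300 seconds after completion. With `generateName`, Kubernetes appends a random suffix for every run. The sketch below does that and also fans the `argosay` template out over a list with `withItems`, so each item runs in its own pod in parallel. The `fan-out` template name and the list items are illustrative assumptions:

```yaml
metadata:
  generateName: delightful-python-   # each submission gets a unique name
  namespace: argo
spec:
  entrypoint: fan-out
  templates:
    - name: fan-out
      steps:
        # One step instance per list item; the instances run in parallel
        - - name: say
            template: argosay
            arguments:
              parameters:
                - name: message
                  value: '{{item}}'
            withItems:
              - hello argo
              - hello kubernetes
              - hello metal
    - name: argosay
      inputs:
        parameters:
          - name: message
      container:
        image: argoproj/argosay:v2
        command:
          - /argosay
        args:
          - echo
          - '{{inputs.parameters.message}}'
  ttlStrategy:
    secondsAfterCompletion: 300
  podGC:
    strategy: OnPodCompletion
```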

Conclusion

You have now learned how to quickly set up Argo Workflows and orchestrate parallel jobs on Metal Stack Cloud. Have fun!